When a memory access occurs, the cache maps the address to a block. That is what direct mapped means: there is no set to search with an associative lookup, and that is the main reason such a cache would ever be used. The example you are asked to solve is even simpler, because the block size is equal to the word size. The 16-byte cache holds four blocks, and therefore four words. This means that each word is independent: accessing and replacing one word has no effect on any adjacent word.

This is why the question gives you the sequence of accessed addresses in binary. It's an exercise in how well you understand binary, not how well you understand caches. The main difficulty is in translating to decimal: 7, 10, 2, 10, 7, 4, 4, 10, 4, 2. Once we have that, we can see the access pattern clearly: 7 goes to block/word 3, 10 goes to 2, and so on. (Here, it can help to look back at the binary representation, where we take the least significant two bits.)
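As a rough illustration of that translation step, here is a small Python sketch that converts the accesses to decimal and picks the cache block from the two least significant bits. The binary strings are my own transcription of the decimal sequence above, and `block_index` is a hypothetical helper name, not something taken from the question.

```python
# Word addresses of the example as 4-bit binary strings.
# Assumption: transcribed from the decimal sequence 7, 10, 2, 10, 7, 4, 4, 10, 4, 2.
TRACE_BITS = ["0111", "1010", "0010", "1010", "0111",
              "0100", "0100", "1010", "0100", "0010"]


def block_index(address: int, num_blocks: int = 4) -> int:
    """Return the cache block selected by the low bits of a word address."""
    # For num_blocks == 4 this is the same as address & 0b11.
    return address % num_blocks


addresses = [int(bits, 2) for bits in TRACE_BITS]
blocks = [block_index(a) for a in addresses]

for bits, addr, blk in zip(TRACE_BITS, addresses, blocks):
    print(f"{bits} -> address {addr:2d} -> block {blk}")
```

Because the block size equals the word size here, the block number and the word number coincide, so no separate word offset is needed.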

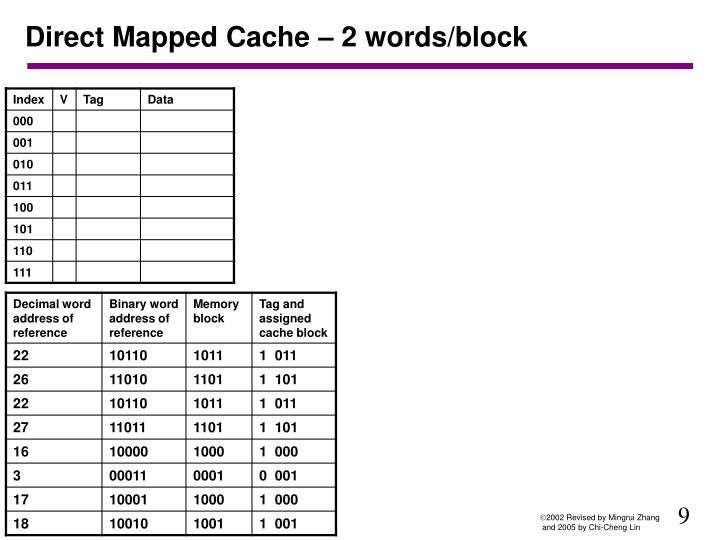

In a direct-mapped cache, there is nothing to search for. Each memory block is mapped to exactly one cache block, as selected by the index bits.

Your cache has 4 blocks of 4 bytes each, so the cache size is 16 bytes. Each cache block (4 bytes) stores one word (4 bytes). (In this machine the smallest addressable unit is a word of 4 bytes.) Two bits of the word address are taken directly to address these 4 blocks, which is why this is called a direct-mapped cache. The question does not clearly state which two of the four address bits to use, but usually the least significant bits of the word address (above the byte-select bits, which do not exist here) are used. These bits are also called the index bits. This leaves the upper two bits for the tag, which is stored along with the block and identifies the block's position in main memory.

The memory has \$2^4=16\$ words and is thus divided into 16 blocks. Cache block 0 can store memory blocks 0, 4, 8 and 12, because the two least significant bits (the index) are binary "00" in all of these memory block addresses. Cache block 1 can store memory blocks 1, 5, 9 and 13, because the index is binary "01" here.

The tag memory (table) is addressed with the same index. If the tag stored at that index matches the tag of the word address, we have a hit. Otherwise we have a miss: the block is loaded from memory into the cache at the indexed block, and the tag entry is updated. There is no further search because this is a direct-mapped cache.

Analyzing the address trace gives, for instance:

| Address | Index | Tag table before / after | Result |
|---|---|---|---|
| 0010 | 10 | 10 / 00 | Miss, because another tag is stored |
| 1010 | 10 | 00 / 10 | Miss, because another tag is stored |

Thus, the hit rate is 4/10 = 40% in this example.
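The hit/miss bookkeeping described above can be replayed with a short Python sketch. It uses the decimal address sequence quoted earlier in this thread (7, 10, 2, 10, 7, 4, 4, 10, 4, 2) and assumes the cache starts empty. The function name `simulate_direct_mapped` is made up for illustration.

```python
def simulate_direct_mapped(addresses, num_blocks=4):
    """Replay word addresses through a direct-mapped cache (one word per block).

    Returns a list of (address, index, tag, hit) tuples.
    Assumption: the cache starts empty, so every first access to a block misses.
    """
    tags = [None] * num_blocks  # one stored tag per cache block; None = invalid
    results = []
    for addr in addresses:
        index = addr % num_blocks   # low bits select the cache block
        tag = addr // num_blocks    # remaining high bits form the tag
        hit = tags[index] == tag    # single comparison, no search
        if not hit:
            tags[index] = tag       # miss: load the block and overwrite the tag
        results.append((addr, index, tag, hit))
    return results


TRACE = [7, 10, 2, 10, 7, 4, 4, 10, 4, 2]
results = simulate_direct_mapped(TRACE)
hits = sum(hit for _, _, _, hit in results)

for addr, index, tag, hit in results:
    print(f"{addr:04b}  index={index:02b}  tag={tag:02b}  {'hit' if hit else 'miss'}")
print(f"hit rate = {hits}/{len(TRACE)}")
```

Under these assumptions the sketch counts 4 hits in 10 accesses, matching the 40% figure. Addresses 2 and 10 share index `10`, so they keep evicting each other.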

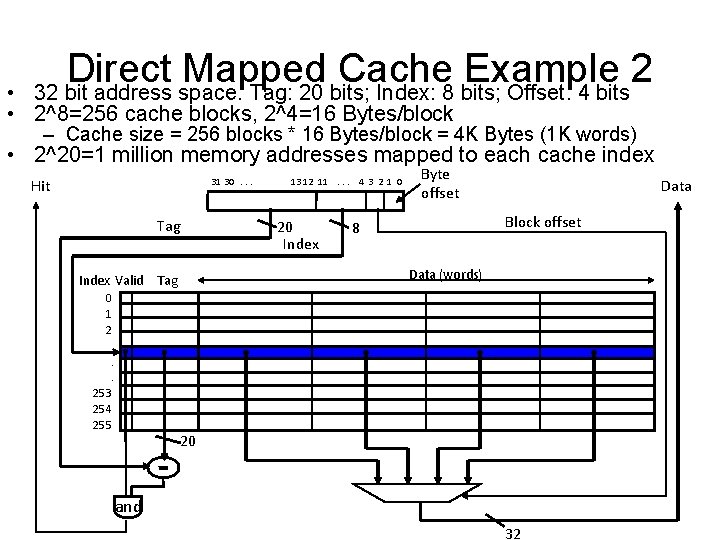

Direct mapping is a procedure used to assign each memory block in main memory to a particular line in the cache. If a line is already filled with a memory block and a new block needs to be loaded, the old block is discarded from the cache. The figure shows how multiple blocks from the example are mapped to each line in the cache.

Just like locating a word within a block, bits are taken from the main memory address to uniquely describe the line in the cache where a block can be stored.

Example: Consider a cache with 2^9 = 512 lines; a line then needs 9 bits to be uniquely identified.

Direct mapping divides an address into three parts: t tag bits, l line bits, and w word bits. The word bits are the least significant bits and identify the specific word within a block of memory. The line bits are the next least significant bits and identify the line of the cache in which the block is stored. The remaining t bits form the tag.
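The three-way split can be sketched in Python as follows. The helper `split_address` and the sample address are hypothetical. The 9 line bits come from the 512-line example, while the choice of 2 word bits is purely an assumption for illustration.

```python
def split_address(address: int, line_bits: int, word_bits: int):
    """Split an address into (tag, line, word) fields for direct mapping."""
    word = address & ((1 << word_bits) - 1)                 # lowest w bits
    line = (address >> word_bits) & ((1 << line_bits) - 1)  # next l bits
    tag = address >> (word_bits + line_bits)                # remaining t bits
    return tag, line, word


# Bits needed to identify one of 512 lines.
line_bits = (512 - 1).bit_length()

# Hypothetical 14-bit address laid out as tag | line | word.
# Assumption: 2 word bits, which the text does not specify.
sample = 0b101_000000011_10
tag, line, word = split_address(sample, line_bits=line_bits, word_bits=2)
print(f"line_bits={line_bits} tag={tag} line={line} word={word}")
```

Setting `word_bits=0` and `line_bits=2` gives the index/tag split of the 4-block example, where each block holds a single word.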
